The term bot is used frequently across the internet to describe a computer program that automates actions or tasks over the Internet. A bot is not inherently good or bad; it falls into one of those two categories depending on whether it is used with good or bad intent.

Good Bots

A good bot is one that performs useful or helpful tasks without harming a user’s experience on the Internet. Many bots are considered good, for example:

- Search Engine Bots: These bots, often referred to as web crawlers or spiders, are operated by major search engines such as Google and Bing.

- Site Monitoring Bots: These bots monitor website metrics (for example, backlinks or system outages) and can alert users to major changes or downtime. They are operated by services such as HetrixTools, UptimeRobot and Cloudflare.

- Feed Bots: These bots crawl around the internet looking for content to add to a platform’s news feed and are operated by aggregator sites or social media networks.

- Personal Assistant Bots: Although these programs are more advanced than a typical bot, they are bots nonetheless. They browse the internet looking for data that matches a search and are operated by companies such as Apple (Siri), Amazon (Alexa) and Google (Google Assistant).

Bad Bots

A bad bot is one that performs malicious acts, steals data, or damages servers, networks or websites. Bad bots can be used to carry out distributed denial of service (DDoS) attacks, or to scan servers, networks or websites for vulnerabilities that can be exploited to compromise those systems.

We have seen bad bots become a significantly bigger problem over the last few years for both server administrators and website owners. These bots often target a server or website, performing thousands of requests and collecting huge amounts of data in a particularly short period of time.

These requests can cause significant spikes in a server’s or website’s resource usage, degrading its performance and making it slower for normal visitors. In some cases the extra load from more aggressive bots can make a server or website unstable, which in turn can cause websites to become unresponsive or, worse, crash entirely.

These bots don’t bring useful traffic to your server or website, and on top of the server resources they use, they will consume as much of the available bandwidth as they can.
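If you want to see which clients are behind this kind of load before deciding what to block, your access log is a good starting point. The sketch below is a minimal example, assuming the log uses the Apache/NCSA combined format, where the User-Agent is the last double-quoted field; the function name top_user_agents and the log path in the usage line are illustrative, not part of DirectAdmin.

```bash
#!/usr/bin/env bash
# Sketch: list the User-Agents that made the most requests in an access log.
# Assumes the "combined" log format: splitting a line on double quotes puts
# the User-Agent in field 6.

# top_user_agents LOGFILE [N] -> "COUNT USER-AGENT" lines, busiest first
top_user_agents() {
  local log="$1" n="${2:-10}"
  awk -F'"' 'NF >= 6 { print $6 }' "$log" \
    | sort | uniq -c | sort -rn | head -n "$n"
}
```

For example, `top_user_agents /path/to/example.com.access.log 20` shows the 20 busiest User-Agents. Keep in mind that the User-Agent header is set by the client and can be spoofed, so the counts show what clients claim to be.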

Blocking Techniques

Fortunately, there are several techniques we can use to block bad bots. At the website level, we can add rules to the .htaccess file that match the HTTP_USER_AGENT header and return a 403 Forbidden response. An example .htaccess rule looks like this:

```apache
RewriteEngine On
RewriteCond %{HTTP_USER_AGENT} (360Spider|AhrefsBot|Bandit) [NC]
RewriteRule .* - [F,L]
```

The .htaccess method is sufficient if the list of bad bots you want to block is small. However, at the last count there were 578 active bad bots scanning the internet.
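To preview which User-Agent strings the RewriteCond pattern above would catch, you can approximate it with grep. This is a sketch rather than Apache itself: grep -E with -i stands in for the rule's regular expression and its [NC] flag, and the sample User-Agent strings are made up for illustration. Note that the pattern is unanchored, so it matches anywhere in the header.

```bash
#!/usr/bin/env bash
# Sketch: approximate the example RewriteCond with grep.
# -E enables extended regular expressions (this simple alternation behaves
# the same as in Apache's PCRE), -i mirrors the [NC] "no case" flag and
# -q only sets the exit status.
HTACCESS_PATTERN='(360Spider|AhrefsBot|Bandit)'

# would_block UA -> exit status 0 if the pattern matches anywhere in UA
would_block() {
  printf '%s\n' "$1" | grep -Eiq -- "$HTACCESS_PATTERN"
}

# Sample User-Agent strings (made up for illustration)
for ua in "Mozilla/5.0 (compatible; AhrefsBot/7.0)" \
          "ahrefsbot" \
          "Mozilla/5.0 (X11; Linux x86_64) Firefox/115.0"; do
  if would_block "$ua"; then
    echo "403   $ua"
  else
    echo "allow $ua"
  fi
done
```

The first two samples are reported as 403 and the browser string is allowed, which also shows why every new bot means another edit to the pattern.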

In this guide, we will show you how to block bad bots, crawlers and scrapers from accessing your DirectAdmin server by implementing a block rule using ModSecurity. This guide assumes you have already installed ModSecurity. If you haven’t, you can follow our How to Install ModSecurity with OpenLiteSpeed and DirectAdmin guide.

Note

If you don’t have root access to your server in order to modify the ModSecurity configuration files, we highly recommend you take a look at using the 7G Firewall produced by Jeff Starr at Perishable Press.

By default, the modsecurity.conf file includes all rule sets and configuration files located in the /etc/modsecurity.d/ folder. However, if we placed our rule set directly in this folder, custombuild would overwrite it whenever a ModSecurity rebuild command is issued. Instead, we can use custombuild’s custom configuration option to have the rule set added to the /etc/modsecurity.d/ folder.

First, we will need to create a custom ModSecurity folder within the custombuild directory using the following commands.

```bash
cd /usr/local/directadmin/custombuild
mkdir -p custom/modsecurity/conf
```

Now we will need to create the 00_bad_bots.conf file in the ModSecurity folder using the following command.

```bash
nano -w /usr/local/directadmin/custombuild/custom/modsecurity/conf/00_bad_bots.conf
```

In the 00_bad_bots.conf file, paste the following ModSecurity rule.

```apache
# BLOCK BAD BOTS
SecRule REQUEST_HEADERS:User-Agent "@pmFromFile bad_bot_list.txt" "phase:2,t:none,t:lowercase,log,deny,severity:2,status:406,id:1100000,msg:'Custom WAF Rules: WEB CRAWLER/BAD BOT'"
```

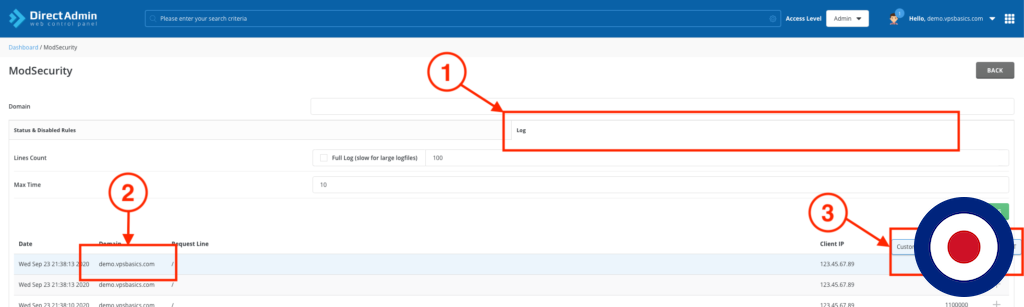

The above rule blocks any bot listed in the bad_bot_list.txt file with a 406 Not Acceptable response. Blocks are logged under rule ID 1100000 with the message Custom WAF Rules: WEB CRAWLER/BAD BOT. We have used a high rule ID to avoid conflicts with your existing ModSecurity rules. Any violations of the rule will show in the ModSecurity log in the DirectAdmin dashboard.
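@pmFromFile performs a phrase match: the rule fires when any line of bad_bot_list.txt appears anywhere in the User-Agent header, which t:lowercase lowercases first. The sketch below approximates that check with a case-insensitive, fixed-string grep so you can try a list locally before rebuilding. It is an illustration only; the function name matches_bad_bot is hypothetical, and ModSecurity's own matching engine is not involved.

```bash
#!/usr/bin/env bash
# Sketch: approximate "@pmFromFile bad_bot_list.txt" with t:lowercase for a
# single User-Agent string: a case-insensitive, fixed-string, substring match
# against each phrase in the list. This is grep, not ModSecurity.

# matches_bad_bot UA LISTFILE -> exit status 0 if UA contains a listed phrase
matches_bad_bot() {
  local ua="$1" list="$2" phrases
  # Strip Windows line endings, then drop blank lines and # comments;
  # an empty pattern would make grep -F match every string.
  phrases=$(tr -d '\r' < "$list" | grep -v -e '^[[:space:]]*$' -e '^[[:space:]]*#')
  [ -n "$phrases" ] || return 1
  # grep treats newline-separated phrases as separate patterns
  printf '%s\n' "$ua" | grep -qiF -- "$phrases"
}
```

For example, `matches_bad_bot "Mozilla/5.0 (compatible; AhrefsBot/7.0)" bad_bot_list.txt && echo "would get a 406"` reports a match as long as the list contains AhrefsBot.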

Next we will need to create the bad_bot_list.txt file. The bad bot list we will be using has been curated by Mitchell Krog (mitchellkrogza) and is part of a wider project by Mitchell called Apache Ultimate Bad Bot Blocker. At the time of writing this guide, the current version is 3.2020.08.1192, with the last commit on 7th August 2020. Create the bad_bot_list.txt file using the following command.

```bash
nano -w /usr/local/directadmin/custombuild/custom/modsecurity/conf/bad_bot_list.txt
```

In the bad_bot_list.txt file simply copy and paste the Bad Bot List and then save the bad_bot_list.txt file.
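Text copied from a web page can carry trailing spaces, Windows line endings or blank lines, and a stray space at the end of a name may mean that phrase only matches when the space is present. A minimal clean-up sketch, assuming you first paste the list into a scratch file (raw_list.txt below is a hypothetical name) and that normalize_bot_list is just an illustrative helper:

```bash
#!/usr/bin/env bash
# Sketch: tidy a pasted bot list before saving it as bad_bot_list.txt.
# - trims leading/trailing whitespace, including a trailing \r from Windows line endings
# - drops blank lines and lines starting with #
# - removes duplicates, ignoring case (the rule lowercases the User-Agent anyway)

# normalize_bot_list < RAW > CLEAN
normalize_bot_list() {
  sed -e 's/^[[:space:]]*//' -e 's/[[:space:]]*$//' \
    | grep -v -e '^$' -e '^#' \
    | LC_ALL=C sort -uf
}
```

For example, `normalize_bot_list < raw_list.txt > /usr/local/directadmin/custombuild/custom/modsecurity/conf/bad_bot_list.txt`. Always write to a different file from the one you read, because redirecting into the input file empties it before it is read.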

Note

If you want a more targeted approach to blocking bad bots, you can customise the list to your liking by adding or removing entries. For example, if you only wanted to block AhrefsBot, you could add just that one entry to the list and rebuild accordingly.
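If you maintain the list by hand, a small helper keeps additions and removals tidy. This is a hypothetical sketch (add_bot and remove_bot are not DirectAdmin or custombuild commands); it matches whole lines case-insensitively, in line with the rule's lowercasing, and you still need to rebuild afterwards for a change to take effect.

```bash
#!/usr/bin/env bash
# Sketch: add or remove a single entry in a bot list file.
# Matching is whole-line and case-insensitive, so "ahrefsbot" and "AhrefsBot"
# count as the same entry. Files are rewritten in place, so the original
# file keeps its ownership and permissions.

# add_bot NAME FILE -> appends NAME unless it is already listed
add_bot() {
  local name="$1" file="$2"
  grep -qixF -- "$name" "$file" 2>/dev/null && return 0
  # Make sure the last existing line ends with a newline before appending
  if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
    printf '\n' >> "$file"
  fi
  printf '%s\n' "$name" >> "$file"
}

# remove_bot NAME FILE -> deletes every line equal to NAME (ignoring case)
remove_bot() {
  local name="$1" file="$2" tmp
  tmp=$(mktemp) || return 1
  grep -vixF -- "$name" "$file" > "$tmp"
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

For example, `add_bot "AhrefsBot" /usr/local/directadmin/custombuild/custom/modsecurity/conf/bad_bot_list.txt` adds AhrefsBot only if it is not already listed.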

Now you will need to rebuild the ModSecurity rules and rewrite the website configurations by running the following commands from the /usr/local/directadmin/custombuild directory.

```bash
./build modsecurity_rules
./build rewrite_confs
```

After the ModSecurity rebuild has completed, you can check that the files have been copied to the /etc/modsecurity.d/ folder using the following command.

```bash
ls -la /etc/modsecurity.d/
```

```text
[demo@vpsbasics] # ls -la /etc/modsecurity.d/
-rw-r--r-- 1 root root 222 Sep 23 20:30 00_bad_bots.conf
-rw-r--r-- 1 root root 5534 Sep 23 20:10 bad_bot_list.txt
```
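The ls output confirms the files exist, but not that they are the versions you just edited. Assuming custombuild copies the files unchanged, you can compare each deployed copy with the one in your custom folder; check_deployed below is a hypothetical helper, not part of custombuild.

```bash
#!/usr/bin/env bash
# Sketch: compare files in a source folder with their deployed copies.
# Prints OK, DIFFERS or MISSING per file and returns non-zero if any file
# is not an exact byte-for-byte copy.

# check_deployed SRC_DIR DST_DIR FILE...
check_deployed() {
  local src="$1" dst="$2" f status=0
  shift 2
  for f in "$@"; do
    if [ ! -f "$dst/$f" ]; then
      echo "MISSING  $dst/$f"; status=1
    elif ! cmp -s "$src/$f" "$dst/$f"; then
      echo "DIFFERS  $dst/$f"; status=1
    else
      echo "OK       $dst/$f"
    fi
  done
  return "$status"
}
```

For this guide the call would be `check_deployed /usr/local/directadmin/custombuild/custom/modsecurity/conf /etc/modsecurity.d 00_bad_bots.conf bad_bot_list.txt`.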

Test Bad Bot Blocking

Now you can test that ModSecurity is blocking bad bots correctly using the following commands.

```bash
curl -A "AhrefsBot" https://example.com
curl -A "ahrefsbot" https://example.com
```

Note

Don’t forget to change https://example.com to your own domain you want to test.

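Both requests should receive a 406 Not Acceptable response. This shows that if a bot such as AhrefsBot changed its name to ahrefsbot, it would still be detected, because the ModSecurity rule is case-insensitive. The rule also records partial matches: a listed name is detected anywhere within a longer User-Agent string, so Mozilla/5.0 (compatible; AhrefsBot/7.0) is blocked as well.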

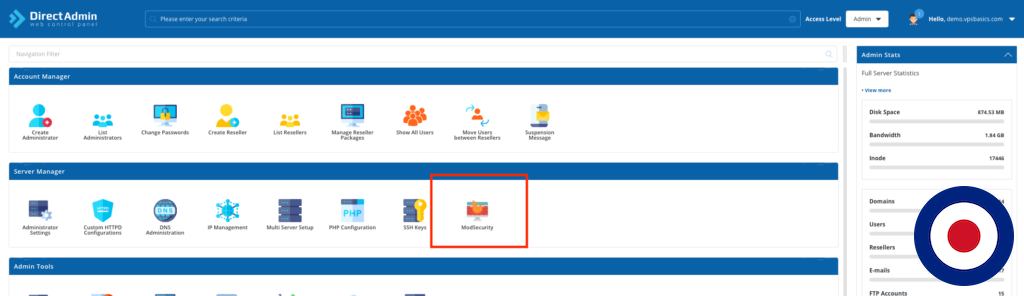
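To check several User-Agent strings in one go and see only the status code, you can wrap curl in a small loop. This is a sketch, assuming the rule returns 406 as configured above; check_agents and classify_status are illustrative names, and https://example.com must again be replaced with your own domain.

```bash
#!/usr/bin/env bash
# Sketch: send one request per User-Agent and report whether the
# bad bot rule answered with 406 (the status set in 00_bad_bots.conf).

# classify_status CODE -> short description of an HTTP status code
classify_status() {
  case "$1" in
    406) echo "blocked" ;;
    000) echo "no response" ;;   # curl prints 000 when no HTTP reply arrived
    *)   echo "not blocked ($1)" ;;
  esac
}

# check_agents URL UA... -> one line per User-Agent
check_agents() {
  local url="$1" ua code
  shift
  for ua in "$@"; do
    # -s hides progress, -o discards the body, -w prints only the status code
    code=$(curl -s -o /dev/null -w '%{http_code}' -A "$ua" "$url")
    printf '%-45s %s\n' "$ua" "$(classify_status "$code")"
  done
}
```

For example, `check_agents https://example.com "AhrefsBot" "ahrefsbot" "Mozilla/5.0 (compatible; AhrefsBot/7.0)" "Mozilla/5.0 (X11; Linux x86_64) Firefox/115.0"`. The last string stands in for a normal browser and should normally not be blocked.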

You can check the ModSecurity log by logging into DirectAdmin. Once in the main dashboard select the ModSecurity icon under Server Manager.

In the ModSecurity dashboard, select Log from the tabbed menu. Under the domain section you will see the domain used in the curl test above, and to the right you will see that the request was blocked by rule ID 1100000.
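If you prefer the command line, you can count rule hits per domain directly from the log file. Its location depends on your web server and ModSecurity configuration, so LOGFILE is a placeholder here, and the sketch assumes each entry carries ModSecurity's usual [id "..."] and [hostname "..."] tags; rule_hits_by_host is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: count log entries for one rule ID, grouped by hostname.
# Assumes entries contain tags like [id "1100000"] and [hostname "example.com"].

# rule_hits_by_host LOGFILE [RULE_ID] -> "COUNT HOSTNAME" lines, most hits first
rule_hits_by_host() {
  local log="$1" rule_id="${2:-1100000}"
  grep -F "[id \"$rule_id\"]" "$log" \
    | grep -o '\[hostname "[^"]*"]' \
    | sed 's/^\[hostname "//; s/"]$//' \
    | sort | uniq -c | sort -rn
}
```

For example, `rule_hits_by_host LOGFILE` counts hits for rule 1100000, and passing a second argument checks a different rule ID.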

That’s it. You have successfully implemented a ModSecurity rule to block bad bots, crawlers and scrapers from accessing your DirectAdmin server.
